This article explains data cleansing and data transformation and their benefits in data mining and machine learning tasks. It covers why cleaning data is important for data analysis, detailed information on data cleaning and data transformation, the benefits of both with examples, FAQs on data cleaning and data transformation in data mining, machine learning, and data analysis tasks, and whether you can clean or transform data in Power BI after importing it. If you find the information useful, please share it with others.

Data cleaning involves removing incorrect data, removing duplicates, removing corrupt data, handling incomplete data, and so on.

Introduction / Brief on Data Cleansing and Data Transformation:

Why is cleaning data important for data analysis?

- To avoid duplicates: Suppose a dataset contains duplicate rows, as shown in the table below:

| Customer | Sales |
| --- | --- |
| John | 20 |
| Lynda | 30 |
| Arthur | 40 |
| John | 20 |

In the dataset above, the first and fourth rows are identical. If we don’t remove the duplicate, pivoting the data will show sales of 40 for John, whereas the actual figure is only 20.
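
The effect of the duplicate row can be sketched in a few lines of Python. This is only an illustration using the rows from the table; the function name `total_sales` is hypothetical, and it assumes that two identical rows really are the same sale recorded twice rather than two separate sales of the same amount.

```python
from collections import defaultdict

# Rows from the example table; the last row duplicates the first.
rows = [("John", 20), ("Lynda", 30), ("Arthur", 40), ("John", 20)]

def total_sales(records):
    """Sum sales per customer, similar to pivoting on the Customer column."""
    totals = defaultdict(int)
    for customer, sales in records:
        totals[customer] += sales
    return dict(totals)

# dict.fromkeys keeps the first occurrence of each row and preserves order.
deduplicated = list(dict.fromkeys(rows))

print(total_sales(rows))          # John is counted twice here
print(total_sales(deduplicated))  # John is counted once here
```

If repeated rows can be legitimate in your data, a unique key such as an order ID (not present in this example) would be a safer basis for deduplication than comparing whole rows.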

Hence, to avoid such mistakes it is better to remove duplicates.

- To avoid errors because of corrupt data:

| Customer | Sales |
| --- | --- |
| John | 20 |
| Lynda | 30 |
| Arthur | 40 |
| Jacks | a#% |

The example above shows what happens if we don’t enforce data type constraints.

In the Sales column, all the values are integers except the last one, which is a non-numeric string.

If we try to sum Sales for this table, we will get an error, because integers cannot be added to a non-numeric string.
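
A minimal Python sketch of that failure and one way around it. It assumes the Sales values arrive as text (for instance from a CSV export), and the helper name `parse_sales` is hypothetical.

```python
# Summing integers together with a non-numeric string fails outright.
mixed_sum_failed = False
try:
    sum([20, 30, 40, "a#%"])
except TypeError as exc:
    mixed_sum_failed = True
    print("cannot sum mixed types:", exc)

# Assumption: values are read as text, as they would be from a CSV file.
rows = [("John", "20"), ("Lynda", "30"), ("Arthur", "40"), ("Jacks", "a#%")]

def parse_sales(value):
    """Return the value as an int, or None when it is not a whole number."""
    try:
        return int(value)
    except (TypeError, ValueError):
        return None

clean, rejected = [], []
for customer, raw in rows:
    sales = parse_sales(raw)
    if sales is None:
        rejected.append((customer, raw))  # keep for review, do not sum
    else:
        clean.append((customer, sales))

total = sum(sales for _, sales in clean)
print(total, rejected)
```

Keeping the rejected rows in a separate list, rather than silently dropping them, makes it possible to check later how much data was excluded and why.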

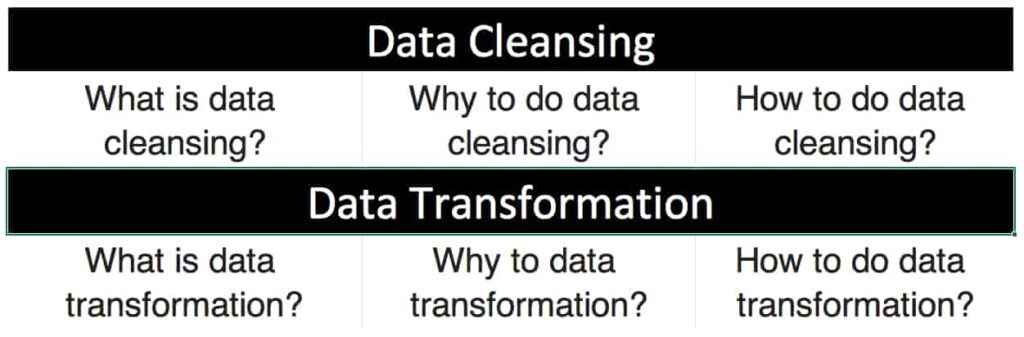

Detailed information on data cleaning and data transformation:

| Question | Answer / Comment |
| --- | --- |
| What is data cleansing? | Removing or correcting inaccurate, outdated, or irrelevant information that may distort the final results. For example, if a column contains corrupted data, queries on it will start throwing errors; data cleansing prevents that. Further Read: Guide To Data Cleaning: Definition, Benefits, Components, And How To Clean Your Data |
| Why do data cleansing? | To get accurate information and insights for decision making. For example, if the data contains many outliers that are not handled before querying, results come out either too high or too low, and in both cases the insight will not support effective decisions. Further Read: Benefits of Data Cleansing |
| How to do data cleansing? | Typical steps include: removing outliers, i.e. values that are far too high or too low relative to the rest; removing errors, i.e. corrupted or noisy data in the dataset; filling in missing data with an educated guess, e.g. the average, an assumption, or the most frequently occurring value; and removing duplicates and irrelevant information. Further Read: Guide To Data Cleaning: Definition, Benefits, Components, And How To Clean Your Data |
| What is data transformation? | Converting data from one format to another. For example, in machine learning, text is converted into numbers using techniques such as hashing. Further Read: What is data transformation: definition, benefits, and uses |
| Why do data transformation? | For easier storage, e.g. changing a data type to reduce its storage size, or storing only a summary instead of every data point or log entry. For easier interpretation, e.g. rolling countries up into regions, because country-level data is often too much to digest while a handful of regions is easier to read. To make data consumable by machine learning algorithms, e.g. converting text to numbers before running a model. Further Read: Data Transformation: Types, Process, Benefits & Definition |
| How to do data transformation? | The method depends on the requirement; hashing is one example of transforming data from one format to another. |
| Characteristics of quality data | Valid, accurate, complete, consistent, and uniform. Further Read: 5 Characteristics of Data Quality |
| Can data cleaning or transformation be automated? | Yes, by coding custom rules or putting algorithms in place. For example, you can write logic that tags every sale from Toronto with the North America region, every sale from Brazil with the South America region, and so on. |
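
The automation rule from the last row of the table can be sketched as a simple lookup in Python. The mapping `REGION_BY_LOCATION`, the function `tag_region`, and the sample sales amounts are all made up for illustration; a real rule set would cover far more locations.

```python
# Hypothetical lookup table: location (lower-cased) -> region.
REGION_BY_LOCATION = {
    "toronto": "North America",
    "brazil": "South America",
}

def tag_region(location, default="Unknown"):
    """Map a location to its region, ignoring case and stray whitespace."""
    key = location.strip().lower()
    return REGION_BY_LOCATION.get(key, default)

# Illustrative records; the amounts are invented for this sketch.
sales = [
    {"location": "Toronto", "amount": 120},
    {"location": " brazil ", "amount": 80},
    {"location": "Atlantis", "amount": 10},
]

# Add a "region" field to every record without modifying the originals.
tagged = [{**sale, "region": tag_region(sale["location"])} for sale in sales]

for record in tagged:
    print(record)
```

Returning a default such as "Unknown" instead of raising an error keeps the rule running on unexpected input, while still making unmapped locations easy to find afterwards.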

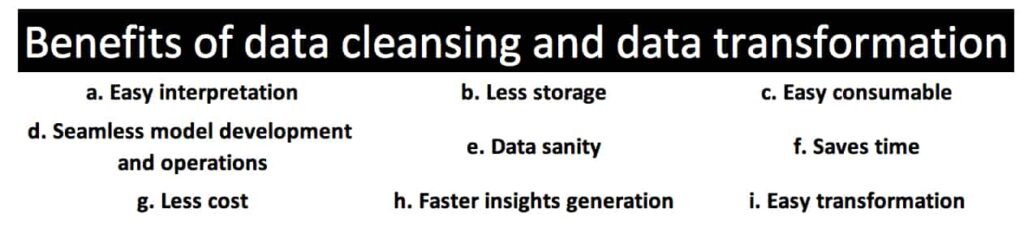

Q. What are the benefits of data cleansing and data transformation in data mining or machine learning tasks with examples?

- Easy interpretation of data: The data becomes easier for end users to read, which leads to better interpretation and insight generation.

- For example, tagging every location in the US or Canada (Texas, say) with the North America region and locations around Saudi Arabia with the Middle East makes the data more readable.

- Similarly, bucketing numerical columns, e.g. putting all values above 1 mn in one bucket, values between 500K and 1 mn in another, and so on, makes the final summary easier.

- Less storage required to store data: Data that is not useful has already been filtered out, so less storage is needed.

- Easy consumption of data: More people can consume the data because it is in an easy-to-digest, interpretable format.

- For example, treating all users under 18 as school students, those aged 18-24 as college students, and so on. Segments like these help in interpreting the business better.

- Seamless model development and operations: Models built on the data for information retrieval are less likely to run into issues or break because of data discrepancies.

- For example, a check in the code that stores or uses a value only if it is numerical, and skips it otherwise, helps the model run without interruption.

- The chance of value errors and similar errors during model development decreases.

- Easy data sanity check or quality check on data: Easy to find any calculation mistakes in models developed on this data.
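
The bucketing and segmentation examples in the list above can be sketched in Python as follows. The exact boundaries (for instance whether exactly 1 mn belongs to the top bucket) and the label for users over 24 are assumptions, since the article only gives the ranges loosely; the function names are hypothetical.

```python
from collections import Counter

def sales_bucket(amount):
    """Assign a sales amount to a coarse bucket for summary tables."""
    if amount > 1_000_000:
        return "1 mn +"
    if amount >= 500_000:
        return "500K - 1 mn"
    return "< 500K"

def age_segment(age):
    """Assign a user to a segment based on age in years."""
    if age < 18:
        return "school student"
    if age <= 24:
        return "college student"
    return "other"  # assumption: the article does not name later segments

# Illustrative values only.
amounts = [1_500_000, 750_000, 1_000_000, 120_000]
summary = Counter(sales_bucket(a) for a in amounts)
print(summary)
```

A summary with three rows is far easier to read than a list of raw amounts, which is the readability benefit described above.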

Benefits of data cleansing and data transformation in data mining or machine learning tasks (contd.)

- Aggregated data takes less storage space than the raw data it was derived from.

- Saves time on repeated analyses, as cleaning is not required every time: Saving the cleaned data in one place means nobody has to work out the right extraction logic again each time they start working on it.

- For example, suppose the dataset had 100K rows before irrelevant rows were excluded and, say, 80K rows afterwards. There are then 20% fewer rows to process, so processing takes correspondingly less time.

- Lower cost of querying due to reduced data size: It reduces the need to query the whole database again and again.

- For example, as in the example above, if the number of rows to process drops from 100K to 80K, both the processing time and the processing capacity required decrease, so one can use a lower-configuration machine and save cost there.

- Faster insights generation from cleaned data: The pipeline from raw data to insights becomes much faster when the data is cleaned and structured. The data is also more accurate, so the insights generated from it are reliable and support better decision making.
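
The size arguments in the list above can be made concrete with a short Python sketch. It assumes processing effort scales roughly with row count, which will not hold for every workload; the function names and the sample events are made up for illustration.

```python
from collections import defaultdict

def reduction_ratio(rows_before, rows_after):
    """Fraction of rows removed by cleaning."""
    return (rows_before - rows_after) / rows_before

def aggregate(events):
    """Collapse (customer, amount) events into one total per customer."""
    totals = defaultdict(int)
    for customer, amount in events:
        totals[customer] += amount
    return dict(totals)

# 100K rows reduced to 80K rows, as in the example above.
saved = reduction_ratio(100_000, 80_000)
print(f"{saved:.0%} fewer rows to process")

# Illustrative raw events: storing the summary needs fewer rows.
events = [("John", 5), ("John", 15), ("Lynda", 30), ("Arthur", 40)]
summary = aggregate(events)
print(len(events), "raw rows ->", len(summary), "summary rows")
```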

So, this is all about “Data Cleansing and Data Transformation Benefits / Explanation in Data Mining or Machine Learning Tasks”. Do let us know in the comment section what else you would like to read about, or if you need any other information on the current topic; we are more than happy to help.

FAQs on data cleaning and data transformation in data mining, machine learning or data analysis tasks:

Q. What is meant by data transformation?

Ans. Data transformation is the process of converting data to another format or, in general terms, to a more usable format, where “usable” depends on the use case. For example:

- Data normalisation is required for fitting machine learning models.

- For example, rescaling the values of a numerical column into the range 0-1 instead of keeping the exact values.

- Converting text to numbers so it can be used as input to data science models, since models operate on numbers rather than raw text.

- For example, creating a column called country code and tagging the US as 01, countries in South America as 02, countries in Europe as 03, and so on.
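
Both transformations can be sketched in plain Python. Min-max scaling is used here as one common way to bring values into the range 0-1; the article does not name a specific method, so treat that choice as an assumption. The region codes likewise assume that each region gets its own distinct code, and the names `min_max_scale`, `REGION_CODES`, and `encode_region` are hypothetical.

```python
def min_max_scale(values):
    """Rescale numbers linearly so the smallest is 0.0 and the largest 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical; avoid dividing by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Assumed lookup: one distinct code per region.
REGION_CODES = {"US": "01", "South America": "02", "Europe": "03"}

def encode_region(region):
    """Return the code for a region, or None if the region is unmapped."""
    return REGION_CODES.get(region)

scaled = min_max_scale([20, 30, 40])
codes = [encode_region(r) for r in ["US", "Europe", "Asia"]]
print(scaled)
print(codes)
```

Codes like these are labels rather than quantities, so a model may wrongly read an order into them; whether that matters depends on the model being used.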

Q. How do you transform a date format in Excel or Google Sheets (for example to dd/mm/yyyy)?

Ans. A date in Excel can be converted to another format using the “Format” option in the top bar. Refer to the screenshots below to see where it is and how to use it.

So, one can format a date as dd/mm/yyyy, mm/yy, dd/mm/yy, dd.mm.yy, and so on. Many other formats are available, and you can pick one based on your requirement.
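
For readers doing the same transformation in code rather than in a spreadsheet, here is a rough Python equivalent using the standard `datetime` module. The sample date is arbitrary, and the dictionary simply maps the formats listed above to `strftime` patterns.

```python
from datetime import date, datetime

sample = date(2022, 3, 7)  # arbitrary example date: 7 March 2022

# Spreadsheet-style format name -> strftime pattern.
patterns = {
    "dd/mm/yyyy": "%d/%m/%Y",
    "mm/yy": "%m/%y",
    "dd/mm/yy": "%d/%m/%y",
    "dd.mm.yy": "%d.%m.%y",
}

formatted = {name: sample.strftime(p) for name, p in patterns.items()}
for name, text in formatted.items():
    print(name, "->", text)

# Going the other way: parse dd/mm/yyyy text back into a date.
parsed = datetime.strptime("07/03/2022", "%d/%m/%Y").date()
```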

Q. Can you clean data or do data transformation in Power BI for data mining related tasks after importing data?

Ans. Yes, it is quite possible by writing custom logic. Power BI is also a good tool for visualising a data problem, which makes it easier to identify and rectify.

For example, as mentioned several times in this article, one can apply:

- Data in range (a, b): Allow only values that are greater than a and less than b; in Power BI one can write custom logic to apply this.

- If-else logic: Write if-else logic to create buckets, e.g. if sales > 1 mn then ‘1 mn +’, if between 500K and 1 mn then ‘500K – 1 mn’, else ‘<500K’.

- Data bucketing: Build tables that show how many values fall into the wrong bucket, to estimate where and how much data cleaning is required.

So, based on the examples above, yes, this is quite possible.
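
The range rule is tool-independent, so the logic is sketched here in Python rather than in Power BI's own formula languages. The bounds, the sample values, and the function name `in_open_range` are assumptions for illustration only.

```python
def in_open_range(value, a, b):
    """True when value is strictly greater than a and strictly less than b."""
    return a < value < b

# Illustrative values and bounds.
values = [5, 12, 18, 25, -3]
a, b = 0, 20

valid = [v for v in values if in_open_range(v, a, b)]
invalid = [v for v in values if not in_open_range(v, a, b)]

# Share of values outside the range: a rough signal of how much
# cleaning the column needs.
invalid_share = len(invalid) / len(values)
print(valid, invalid, invalid_share)
```

Counting how many values fail the rule before removing them gives the same kind of estimate as the data bucketing point above.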

Data cleaning process in machine learning using SQL or Python (data wrangling)

There is no standard process or set of steps for data cleaning, but having a template in place makes sure all the necessary sanity checks are done. For example:

- Check for corrupt data, because it can interfere with the final code.

- Check for duplicates, as not removing them gives incorrect results. For example, if the same sale is present multiple times, it is counted multiple times when summarising and inflates the final sales amount. Always look to remove duplicates.

- Find null or incomplete values and replace them with the average or the most frequently occurring value. If most values in a column are null and they are counted as zero, the average of that column will come out lower than it should be.

- Remove outliers: Check whether the values lie within a certain range and remove values that are too high or too low.
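
The checklist above could be turned into a small reusable template along these lines. This is a sketch under several assumptions, not a standard procedure: the function name `clean_sales` is hypothetical, missing values are represented as `None`, outliers are defined by fixed bounds, and the sample rows (including the customer "Mia") are invented.

```python
from statistics import mean

def clean_sales(rows, low, high):
    """Clean (customer, raw_value) rows using the checklist above."""
    # Duplicates: keep the first occurrence of each identical row.
    rows = list(dict.fromkeys(rows))

    # Corrupt data: keep missing values for later, drop unparseable ones.
    parsed = []
    for customer, raw in rows:
        if raw is None:
            parsed.append((customer, None))
            continue
        try:
            parsed.append((customer, float(raw)))
        except (TypeError, ValueError):
            continue  # corrupt value: skip the row

    # Outliers: drop known values outside the accepted range.
    kept = [(c, v) for c, v in parsed if v is None or low <= v <= high]

    # Nulls: fill missing values with the mean of the remaining values.
    known = [v for _, v in kept if v is not None]
    fill = mean(known) if known else 0.0
    return [(c, fill if v is None else v) for c, v in kept]

raw_rows = [
    ("John", "20"), ("John", "20"), ("Lynda", "30"),
    ("Arthur", None), ("Jacks", "a#%"), ("Mia", "9000"),
]
cleaned = clean_sales(raw_rows, low=0, high=1000)
print(cleaned)
```

Outliers are removed before the missing values are filled so that an extreme value does not skew the average used for filling; the checklist itself does not prescribe an order, so this is a design choice.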
